Feature #20855

closedIntroduce `Fiber::Scheduler#blocking_region` to avoid stalling the event loop.

Description

The current Fiber Scheduler performance can be significantly impacted by blocking operations that cannot be deferred to the event loop, particularly in high-concurrency environments where Fibers rely on non-blocking operations for efficient task execution.

Problem Description¶

Fibers in Ruby are designed to improve performance and responsiveness by allowing concurrent tasks to proceed without blocking one another. However, certain operations inherently block the fiber scheduler, leading to delayed execution across other fibers. When blocking operations are inevitable, such as system or CPU bound operations without event-loop support, they create bottlenecks that degrade the scheduler's overall performance.

Proposed Solution¶

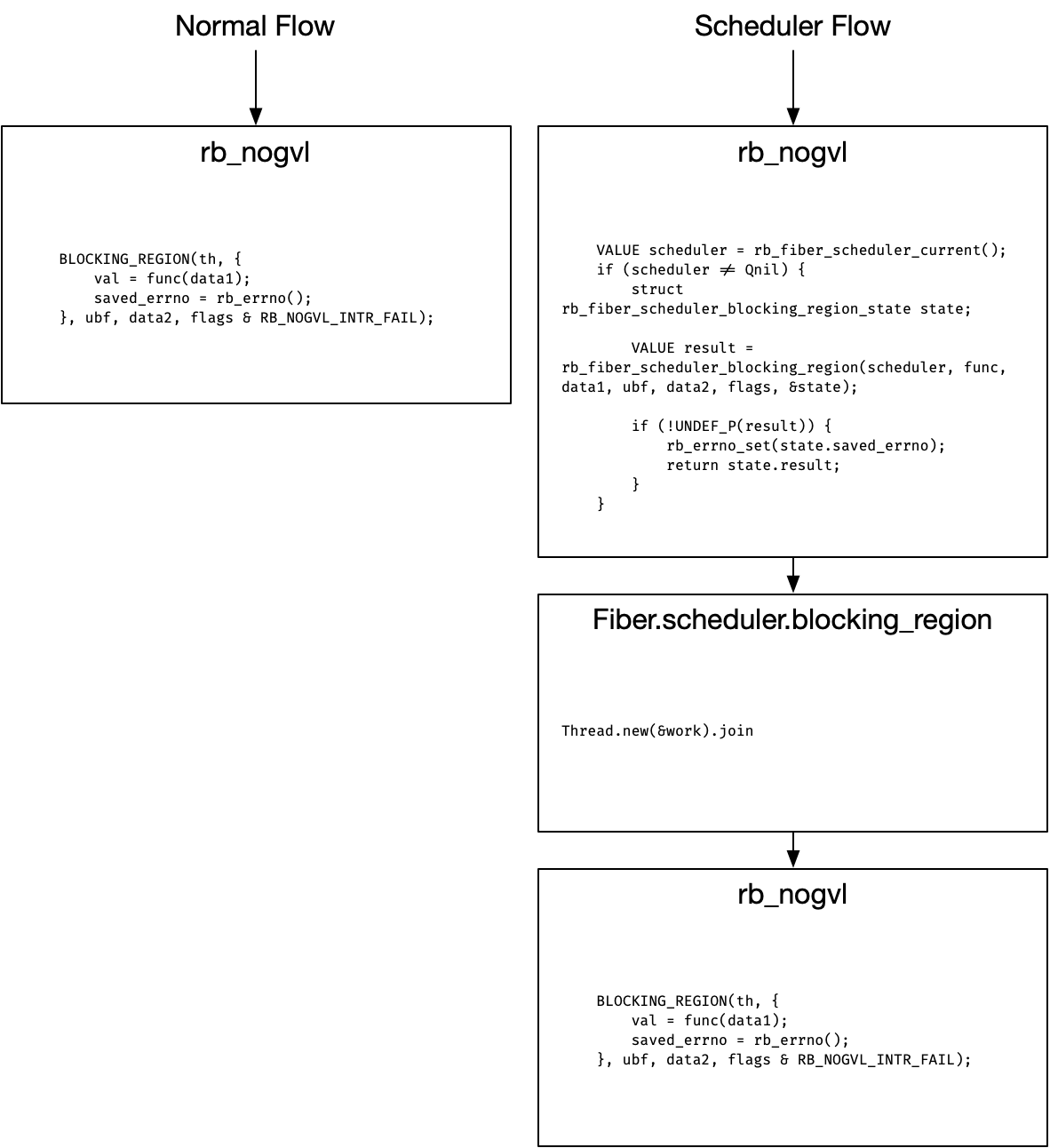

The proposed solution in PR https://github.com/ruby/ruby/pull/11963 introduces a blocking_region hook in the fiber scheduler to improve handling of blocking operations. This addition allows code that releases the GVL (Global VM Lock) to be lifted out of the event loop, reducing the performance impact on the scheduler during blocking calls. By isolating these operations from the primary event loop, this enhancement aims to improve worst case performance in the presence of blocking operations.

blocking_region(work)¶

The new, optional, fiber scheduler hook blocking_region accepts an opaque callable object work, which encapsulates work that can be offloaded to a thread pool for execution. If the hook is not implemented rb_nogvl executes as usual.

Example¶

require "zlib"

require "async"

require "benchmark"

DATA = Random.new.bytes(1024*1024*100)

duration = Benchmark.measure do

Async do

10.times do

Async do

Zlib.deflate(DATA)

end

end

end

end

# Ruby 3.3.4: ~16 seconds

# Ruby 3.4.0 + PR: ~2 seconds.

Files

Updated by ioquatix (Samuel Williams) over 1 year ago

Updated by ioquatix (Samuel Williams) over 1 year ago

- Description updated (diff)

Updated by ioquatix (Samuel Williams) over 1 year ago

Updated by ioquatix (Samuel Williams) over 1 year ago

- Description updated (diff)

Updated by ioquatix (Samuel Williams) over 1 year ago

Updated by ioquatix (Samuel Williams) over 1 year ago

- Description updated (diff)

Updated by ioquatix (Samuel Williams) over 1 year ago

Updated by ioquatix (Samuel Williams) over 1 year ago

- Description updated (diff)

Updated by ioquatix (Samuel Williams) over 1 year ago

Updated by ioquatix (Samuel Williams) over 1 year ago

- Status changed from Open to Closed

Updated by ioquatix (Samuel Williams) over 1 year ago

Updated by ioquatix (Samuel Williams) over 1 year ago

- Description updated (diff)

Updated by ko1 (Koichi Sasada) over 1 year ago

· Edited

Updated by ko1 (Koichi Sasada) over 1 year ago

· Edited

- Status changed from Closed to Open

I against this proposal because

- I can't understand what happens on this description. I couldn't understand the control flow with a given func for rb_nogvl.

- I'm not sure what is the

workforblocking_region()callback. - I don't want to introduce

blocking_region.

I want to revert this patch with current description.

Updated by Eregon (Benoit Daloze) over 1 year ago

Updated by Eregon (Benoit Daloze) over 1 year ago

In terms of naming, blocking_region sounds too internal to me.

I think the C API talks about blocking_function (rb_thread_call_without_gvl, rb_blocking_function_t although that type shouldn't be used) and unblock_function (rb_unblock_function_t, UBF in rb_thread_call_without_gvl docs).

It's also unclear if it's safe to call such blocking functions on different threads.

In general it seems unsafe.

Updated by ioquatix (Samuel Williams) over 1 year ago

· Edited

Updated by ioquatix (Samuel Williams) over 1 year ago

· Edited

I don't introduce blocking_region.

I am not strongly attached to the name, I just used blocking_region as it is quite commonly used internally for handling of blocking operations and has existed for 13 years (https://github.com/ruby/ruby/commit/919978a8d9e25d52697c0677c1f2c0ccb50b4492#diff-d5867d8e382e49f5cdef27a4d24c1a4588954f96e00925092a586659bf1b1ba4R204).

For alternative naming, how about blocking_operation? I also considered thread_call_without_gvl but I feel it's too specific as the scheduler doesn't need to care about what the work is - just that it is blocking, and some platforms like JRuby / TruffleRuby don't necessarily have the same concept of a GVL.

I'm not sure what is the work for blocking_region() callback.

The work is an opaque object that responds to #call (but in the implementation, it is a Proc instance) which in this specific case, wraps the execution of rb_nogvl with the given func and unblock_func. Here is the implementation of the proc (from the PR):

static VALUE

rb_fiber_scheduler_blocking_region_proc(RB_BLOCK_CALL_FUNC_ARGLIST(value, _arguments))

{

struct rb_blocking_region_arguments *arguments = (struct rb_blocking_region_arguments*)_arguments;

if (arguments->state == NULL) {

rb_raise(rb_eRuntimeError, "Blocking function was already invoked!");

}

arguments->state->result = rb_nogvl(arguments->function, arguments->data, arguments->unblock_function, arguments->data2, arguments->flags);

arguments->state->saved_errno = rb_errno();

// Make sure it's only invoked once.

arguments->state = NULL;

return Qnil;

}

I can't understand what happens on this description. I couldn't understand the control flow with a given func for rb_nogvl.

If rb_nogvl is called in the fiber scheduler, it can introduce latency, as releasing the GVL will prevent the event loop from progressing while nogvl function is executing. To avoid this, we wrap the arguments given to rb_nogvl into a Proc and invoke the fiber scheduler hook, so it can decide how to execute the work.

The most basic (blocking) implementation would be something like this:

def blocking_region(work)

Fiber.blocking(&work)

end

Alternatively, using a thread:

def blocking_region(work)

Thread.new(&work).join

end

In terms of flow control, it's the same as all the fiber scheduler hooks, it routes the operation to the fiber scheduler for execution. The scheduler is allowed, within reason, to determine the execution policy.

In general it seems unsafe.

It's a fair point - I found bugs in zlib.c because of this work, so for sure there exists problematic code... But I also don't want to be too reductionistic regarding "unsafe C code" being a problem...

One option to mitigate this risk is to introduce a flag passed to rb_nogvl to either allow or prevent moving func to a different thread. I think this has wider value too - in the M:N scheduler, knowing whether blocking operations can be moved between threads might be extremely useful for similar reasons. Depending on how risk-adverse we are, we could decide to default to "allow by default" or "prevent by default". I'm personally leaning more towards allow by default as I think most usage of rb_nogvl should be safe in practice, but I'd also be okay with being conservative by default. The only problem I see with being conservative by default is a lot of performance may get left on the table until code is updated to use said flags.

I want to revert this patch with current description.

Sorry @ko1 (Koichi Sasada), I was just excited to try this feature out. I've been running tests in async and other projects downstream using ruby-head to evaluate it. Why don't we discuss this at the developer meeting and figure out a path forward? If we still can't come to a conclusion we can revert it. How about that?

Updated by Dan0042 (Daniel DeLorme) over 1 year ago

Updated by Dan0042 (Daniel DeLorme) over 1 year ago

I also don't understand this patch. Zlib.deflate is a CPU-bound operation right? So it makes sense for Fibers of the same Thread to execute the 10 operations sequentially. That's what Fibers are about. If you want parallelism you need Threads or Ractors. It seems like this is transforming Fiber::Scheduler into a generic FiberAndOrThreadScheduler? That can't be right.

Updated by kjtsanaktsidis (KJ Tsanaktsidis) over 1 year ago

Updated by kjtsanaktsidis (KJ Tsanaktsidis) over 1 year ago

If rb_nogvl is called in the fiber scheduler, it can introduce latency, as releasing the GVL will prevent the event loop from progressing while nogvl function is executing

I definitely understand the problem here. But… dealing with a heterogenous mix of compute and IO operations is exactly what the M:N scheduler is for, right? In that design, a Ruby thread in a rb_nogvl block pins the native thread, but other Ruby threads can run on a different native thread.

I don’t think we want a second way for Ruby threads to be moved around between native threads. Should this be integrated with the M:N scheduler in some way?

IMHO I think it should be the responsibility of applications like falcon, etc to explicitly offload heavy work like zlib onto an application managed thread pool.

I wonder if another way to tackle this problem is to add some metrics or callbacks to the fiber scheduler API, so that e.g. async could warn you when the event loop is blocked for a long time and that you should consider pulling work out into a thread pool.

Updated by ioquatix (Samuel Williams) over 1 year ago

Updated by ioquatix (Samuel Williams) over 1 year ago

Zlib.deflate is a CPU-bound operation right? So it makes sense for Fibers of the same Thread to execute the 10 operations sequentially.

Yes, Zlib.deflate with a big enough input can become significantly CPU bound. Yes, executing that on fibers will be completely sequential and cause significant latency in the event loop. This proposal preserves the user visible sequentiality while taking advantage of thread-level parallelism internally to the scheduler. From the user's point of view, nothing is different (code still executes sequentially), but internally, the rb_nogvl callback in Zlib.deflate will not stall the event loop. As the hook is completely optional, the user-visible semantics of the scheduler must be identical with or without this hook.

IMHO I think it should be the responsibility of applications like falcon, etc to explicitly offload heavy work like zlib onto an application managed thread pool.

In a certain way, that's exactly what this proposal does: rb_nogvl provides the information we need to offload heavy work and we can take advantage of it. The goal of the fiber scheduler has always been to be transparent to the user/application/library code. So it's just a matter of where you set the bar for "explicitly" - native extensions can explicitly offload heavy work using rb_nogvl as that's actually the only way to define code that can run safely in parallel - and as you well know there is no similar construct in pure Ruby.

I wonder if another way to tackle this problem is to add some metrics or callbacks to the fiber scheduler API, so that e.g. async could warn you when the event loop is blocked for a long time and that you should consider pulling work out into a thread pool.

The original design of the fiber scheduler had this, it was called enter_blocking_region and exit_blocking_region: https://bugs.ruby-lang.org/issues/16786 but they were rejected as being too strongly connected to the implementation.

Knowing there are blocking operations on the event loop is extremely valuable, so I regret not having those hooks.

Should this be integrated with the M:N scheduler in some way?

For sure there is some overlap. However, my main concern is making the fiber scheduler is good as it can be, and blocking operations within the fiber scheduler/event loop is a reasonable criticism that has come up. So, I want a solution for the fiber scheduler. All things considered, the proposal here is a reasonable solution (pending naming and safety flags). It's about as simple as I could make it and the results show that it works pretty well - within the bounds of what's possible for parallelism in Ruby.

Updated by ioquatix (Samuel Williams) over 1 year ago

· Edited

Updated by ioquatix (Samuel Williams) over 1 year ago

· Edited

After discussing this with @ko1 (Koichi Sasada), we are going to (1) update the name to blocking_operation_wait and (2) introduce a flag to rb_nogvl to take a conservative approach to offloading work to the fiber scheduler. Once that's done I'll update the proposal to reflect the changes.

Updated by ioquatix (Samuel Williams) over 1 year ago

Updated by ioquatix (Samuel Williams) over 1 year ago

- Status changed from Open to Closed

Updated proposal: https://bugs.ruby-lang.org/issues/20876